Sound system

The sound system had not been touched much during the RISC OS Select development. For Phoebe, the machine which Acorn had intended to ship RISC OS 4 on, a new sound controller chip would have been used. The updated controller would have been more flexible, and would have introduced sound input as well as output. The source that drove the new controller chip was incomplete, and in any case didn't drive any real hardware, so was abandoned. There had not been any significant work done towards a redesign of the APIs to handle the new capabilities, either.

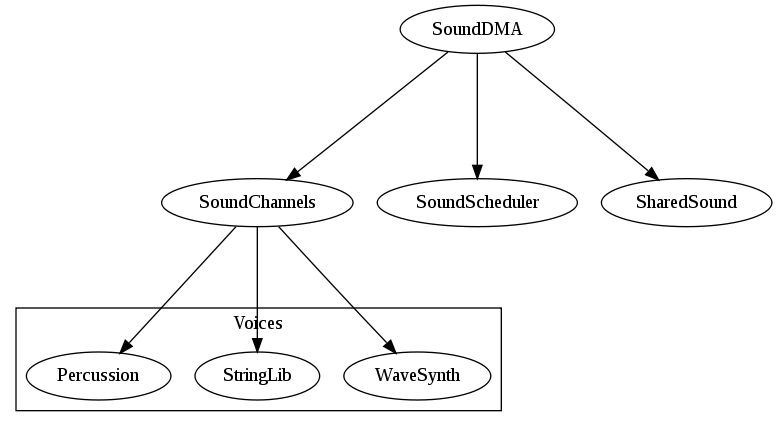

So, with this in mind, my intentions for the sound system were really to make it easier to extend and to provide support for sound input. The sound system is structured with 5 major components:

- SoundDMA (hardware, and raw interface).

- SoundChannels (manages the 8-bit voice handlers).

- Voice handlers (WaveSynth, Percussion, StringLib).

- SoundScheduler (scheduling of sound playback through SoundChannels).

- SharedSound (mixing for the 16-bit handlers).

The layering of the modules is, mostly, sensible. The systems had been made more resilient through Select development by ensuring that restarting the components left the system in a safe state. However, some of the detail was less appealing.

All the components were written in assembler, which is sensible for interrupt driven sound code, but which makes it harder to maintain. Writing a channel handler - one of the 8-bit synthesis modules - was never clear in the manuals, and often you were reliant on knowledge of how the SoundChannels module worked. The sound system was split between 8-bit logarithmic sound and 16-bit sound, with completely different interfaces for each. SoundChannels only supported the 8-bit logarithmic sound interface, so if you wanted a high quality synthesis module you had to provide a 16-bit handler.

SoundDMA only supported a single 16-bit handler, so in order to use multiple 16-bit sound sources you had to use SharedSound. In this regard, SoundChannels and SharedSound are similar, but the similarity ends there. SharedSound only supports registering sound handlers: where SoundChannels allows you to play a sound on a channel with a voice, SharedSound only manages the registration and mixing of its sources.

The result is that you can play notes easily using the internal synthesis, using the BBC SOUND commands in BASIC (and their related system calls), but only through the 8-bit logarithmic interface. If you want a high quality voice, you do not have any control.

SoundChannels is limited to associating 32 voices at any time, which can make it more difficult to use in a dynamic environment. Whilst the number of channels could be increased, it was still limited to only 8 channels at any time.

Looking further down the interface, the SoundDMA module had a number of functions. It provided the programmer's interface, for registering the SoundChannels and SoundScheduler handlers, and for registering a 16-bit sound handler, which was used by SharedSound. It also provided the hardware interface used by these components. When running on 8-bit logarithmic hardware, the 16-bit interface was not supported - I had previously written a module to allow the interface to be emulated (see the earlier 'Patches at a lower level' ramble), but it wasn't supported in SoundDMA itself. When running on 16-bit sound hardware, the 8-bit logarithmic interface was emulated, and mixed with the 16-bit sound data.
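To make the emulation step concrete, here is a minimal sketch of expanding 8-bit logarithmic samples to 16-bit linear values and mixing them into a 16-bit stream. It is not the SoundDMA code: the bit layout (sign in bit 0, 4-bit step, 3-bit chord) and the G.711-style expansion curve are assumptions chosen for illustration, and the function names are hypothetical.

```python
def expand_log8(byte: int) -> int:
    """Expand one 8-bit logarithmic sample to a signed linear value.

    Assumed layout (an illustration, not taken from the hardware manual):
    bit 0 = sign, bits 1-4 = step within the chord, bits 5-7 = chord.
    """
    sign = byte & 1
    step = (byte >> 1) & 0x0F
    chord = (byte >> 5) & 0x07
    # G.711-style mu-law expansion, used here as a stand-in curve.
    magnitude = (((step << 3) + 0x84) << chord) - 0x84
    return -magnitude if sign else magnitude


def mix16(a: int, b: int) -> int:
    """Add two signed 16-bit samples, saturating rather than wrapping."""
    return max(-32768, min(32767, a + b))


def mix_log_into_linear(log_bytes: bytes, linear: list[int]) -> list[int]:
    """Expand each logarithmic sample and mix it with the 16-bit stream."""
    return [mix16(expand_log8(b), s) for b, s in zip(log_bytes, linear)]
```

Saturating the sum is a design choice in this sketch: a wrapped overflow is far more audible than a clipped peak.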

I am ignoring the very high level 'ENVELOPE' support of the BBC, which was not provided by any supplied components. It had been supported by third parties in extension modules, but is more of a historical curio than a useful interface.

MIDI support was available through a separate module, but I omit this from my discussion here mainly because I am less familiar with it. If there had been any further work done on the sound system the MIDI system should have been taken account of, and provided as a sibling to SoundChannels.

Third parties had also supplied some of the high level events with their software. Computer Concepts had supplied an AudioManager module which allowed sounds to be associated with events like applications loading, or windows closing. Nothing was supplied as part of the core system. Acorn had a module with a similar aim - SoundFX - which we could use, but it was designed to work with the NC browser system. It could probably be updated to handle regular Wimp events and services, though.

It was easy to see what the goals needed to be - but quite hard to make them happen, because any changes had to remain backward compatible. The major areas of interest with the sound system can be summed up easily:

- Only stereo output is supported - there is no support for greater numbers of outputs, or any way to easily extend the system to do so.

- Only a single device is supported - there is no way to provide extra sound hardware to output to different outputs.

- The dual (or triple) nature of SoundDMA means that even if you're just providing support for one hardware configuration, you need to provide a lot of common code to support configurations and interfaces.

- Sound voices are limited to being 8-bit logarithmic if you want to use the normal synthesis interface (SOUND).

- Sound synthesis is limited in channels and voices, which makes sharing the system within the desktop more difficult.

- Sound input is not catered for at all - this is a large area in its own right.

- Higher level interfaces within the desktop, such as sounds on given events, are not catered for at all.

For the lower level issues, SoundDMA needed to be reworked to separate its functionality. Instead of providing hardware access, and configuration and registration of handlers, it should separate off the hardware part to dedicated modules. Most likely the hardware part would become a new module SoundHardware_VIDC, with other hardware modules named similarly. The hardware would deal purely with 16-bit data. 8-bit interfaces would be deprecated, to the point that the hardware driver would probably convert from 16-bit data to 8-bit data if only 8-bit was supported. I like to continue supporting interfaces, but the 8-bit logarithmic sound interface would be provided by no hardware other than VIDC - it would be pointless to retain the support for such aged hardware.

The SoundDMA configuration and registration interfaces would become SoundSwitcher, or maybe SoundManager (or some other label which names it more appropriately for the new task). The manager would handle the registration of hardware drivers, which could provide interfaces for more than the stereo channels. Hardware drivers would describe themselves in terms of the channels they provide, and the interface would provide an internal name and a human readable name which could be used in interfaces.

Multiple hardware devices would be able to be registered, and new interfaces created to target the devices. The 'default' device would be selectable from these devices, to allow clients that used legacy interfaces to function. The SoundChannels module would need to be reworked significantly in order to function in the new environment. 8-bit voice handlers would still be supported, but would be converted to the 16-bit data format. New 16-bit voice support would be added, allowing the voices (in the old sense) to be higher quality.

A new voice generator would be created for sampled data, allowing a sample (in a variety of formats) to be associated with a voice in the same way as the regular channels. This could be in a similar way to WaveSynth (through instantiation) or by dedicated support. The number of voices known at any time would be increased from 32 to allow far more voices to be known - not all of the voices may be loaded at the time, and voice handlers may implement on-demand loading of sample data.

Depending on the implementation, sound formats should be supported independently of the sample manager. This was partially provided for by the work that went into !Replay for different file formats. Unfortunately, much of this was unsupported for use outside of !Replay. I did quite a bit of investigation with the sound playback modules, based on sketchy documentation, guesswork and disassembly.

The SoundFile module provided a quite well thought out and extensible interface which allowed different file formats to be played. At the time, Acorn had taken a different route for supporting different hardware. Instead of using different hardware driver modules, they reused the drivers provided as part of !Replay.

A new interface to play sounds by voice name should be considered, although this goes against the existing interfaces for sounds. The place which SoundScheduler has within the sound system should be reconsidered - it hasn't been widely used and may not provide a useful interface. Aside from !Maestro, I don't think it has been used to any good effect. Sibelius has long gone from the RISC OS market, and professional sound work on the systems is not a priority.

Interfaces for system events would need to be available, so that high level events could be associated with sounds. Ian Jeffray had proposed a sound scheme implementation and we had been trialing it to see how it might work in general use. This had been progressing well, although there were more things that needed to be resolved - the events that were triggered needed to be structured and extensible, which would allow applications or modules to provide named notifications. Notifications could be configured individually or in groups, allowing a scheme to be built up. The Alerter protocol, mentioned above, would have dovetailed into this work quite nicely.

A simpler interface for streaming sound should also be provided. John Duffell had written a very useful SharedSoundBuffers module which allowed a user application to feed data to the sound system. This allowed a lot of very simple sound applications to work by filling buffers in the foreground, whilst the module drained them into the sound system. This could be extended to allow other streams, and possibly on-the-fly conversion of data formats.
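The fill-in-the-foreground, drain-in-the-background pattern is essentially a ring buffer. The following is a minimal model of that pattern, not the SharedSoundBuffers interface itself: the class and method names are hypothetical, and padding with silence on underrun is an assumption about what a drain routine might reasonably do.

```python
class StreamBuffer:
    """Fixed-size ring buffer filled by an application, drained by a mixer."""

    def __init__(self, capacity: int) -> None:
        self._data = [0] * capacity
        self._capacity = capacity
        self._read = 0    # index of the oldest unread sample
        self._count = 0   # number of samples currently buffered

    def free_space(self) -> int:
        return self._capacity - self._count

    def fill(self, samples: list[int]) -> int:
        """Foreground side: queue as many samples as fit, return that count."""
        accepted = min(len(samples), self.free_space())
        write = (self._read + self._count) % self._capacity
        for sample in samples[:accepted]:
            self._data[write] = sample
            write = (write + 1) % self._capacity
        self._count += accepted
        return accepted

    def drain(self, n: int) -> list[int]:
        """Background side: take n samples, padding with silence on underrun."""
        available = min(n, self._count)
        out = []
        for _ in range(available):
            out.append(self._data[self._read])
            self._read = (self._read + 1) % self._capacity
        self._count -= available
        out.extend([0] * (n - available))
        return out
```

The application only needs to poll `free_space()` and top the buffer up; it never has to meet the timing constraints of the sound interrupt itself.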

Sound input is an area that needed more thought. Some of the interfaces that had been created for Phoebe were still quite limited, but they might have provided a good basis for further work. Essentially, the sound input APIs needed to support different hardware (which might be the same hardware that provided the sound output) and needed to be configurable in a similar way. The same configuration parameters as were used for the sound output (volume, sample rate, number of channels) would be suitable for use with the input, so it would make sense for any configuration interface to match.

For input, a module like SharedSoundBuffers would be useful to bridge the divide between the user application and the sound input. Unlike the sound output, sound input focuses more on user applications - either because they are recording to a file, or because they are encoding and transmitting to another machine for playback. The interfaces therefore have to be designed to allow user mode access relatively easily.

Front end applications would be required for all these features - controlling the volume, mute and possibly even channel mapping (eg swapping left and right channels). Some hardware might provide effects through its channels, which could do with an interface; I am thinking of the common filters that can be applied for different environments, or to simulate other environments.

I had begun on a rewrite of SoundDMA, but it was halfhearted and misdirected. I knew I needed a separate implementation of SoundDMA, and I wanted the configuration interfaces to be implemented separately from the hardware access. As such, it made more sense to use a high level language. I had become interested in providing greater support for compiled languages other than C. The CFront tool provided a way in which C++ (albeit to quite an old standard) could be compiled, and it seemed reasonable that other languages be supported in a similar manner.

I had ported, and been testing, a Pascal to C conversion tool, and it had been working reasonably well. The next step, obviously, was to build a useful module written from scratch in Pascal - and thus it was that the replacement SoundDMA was created. The major configuration and registration interfaces were all implemented, and the module was able to be loaded in place of the normal SoundDMA without any bad effects - except that it didn't do anything useful with the data.

It was really just an exercise to prove that you could write reasonably complex components in a language other than Assembler or C (albeit the code would be compiled with the C compiler). As an exercise, it was successful, but it didn't really progress the work towards creating a new module. Whilst it would have been interesting to announce that modules were now able to be written in Pascal, the language does not offer much in the way of benefits over writing in C.

One of the useful hardware devices that could be provided separately would be the USB audio interface cards. These are relatively plentiful now - at the time I investigated, they were quite rare. The audio specification for USB is complicated, and there are many different ways that the devices can present themselves. As with other types of hardware, the USB sound devices can provide numerous controls which can manipulate the sound prior to its output (or input).

The USB sound devices provide descriptions of the sound output, with different processing units present to handle different effects - including common things like volume, bass and treble controls. The controls that the USB device provides may operate on single streams or on collections of streams, such as a stereo pair of channels (or more, if more channels are supported).

Not only would the greater number of channels need to be supported, and supported in a generic way, but each of the controls may need to be managed separately. There is no reason why the overall volume should not be controlled by the hardware, rather than scaled in software. Doing so would improve the quality of output at low volumes.
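The quality argument can be shown with a little arithmetic. If 16-bit samples are scaled in software and then re-quantised to integers, the number of distinct output levels shrinks with the volume; a hardware control applied after the data has been delivered at full scale does not have that cost. The sketch below assumes a volume expressed in 1/256ths, which is an illustrative choice rather than any real interface.

```python
def software_scale(sample: int, volume: int) -> int:
    """Scale a signed 16-bit sample by volume/256, rounding towards -infinity."""
    return (sample * volume) >> 8


def distinct_levels(volume: int) -> int:
    """Count the output values reachable across the whole 16-bit input range."""
    return len({software_scale(s, volume) for s in range(-32768, 32768)})
```

At full volume (256) all 65536 levels survive; at 16/256 only 4096 remain (12 bits), and at 1/256 only 256 remain, which is 8-bit resolution from a 16-bit output path.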

USB audio uses isochronous transfers to deliver its data. These are not supported by the Simtec USB stack and, due to the design of the Pace stack, would be inefficient with that stack. USB audio data itself can come in many formats, which the devices will describe. The usual linear data format or a logarithmic representation could be used. Additionally, formats such as MPEG audio could be supported by the USB device.

Whilst having these formats supported by the hardware is useful, it is more difficult to mix them with other input, so it might be more difficult to use them in a general RISC OS system.
